presidency of the United States of America

United States government

Introduction

chief executive office of the United States. In contrast to many countries with parliamentary forms of government, where the office of president, or head of state, is mainly ceremonial, in the United States the president is vested with great authority and is arguably the most powerful elected official in the world. The nation's founders originally intended the presidency to be a narrowly restricted institution. They distrusted executive authority because their experience with colonial governors had taught them that executive power was inimical to liberty, because they felt betrayed by the actions of George III, the king of Great Britain and Ireland, and because they considered a strong executive incompatible with the republicanism embraced in the Declaration of Independence (1776). Accordingly, their revolutionary state constitutions provided for only nominal executive branches, and the Articles of Confederation (1781–89), the first “national” constitution, established no executive branch. For coverage of the 2008 election, see United States Presidential Election of 2008.

Duties of the office

The Constitution succinctly defines presidential functions, powers, and responsibilities. The president's chief duty is to make sure that the laws are faithfully executed, and this duty is performed through an elaborate system of executive agencies that includes cabinet-level departments. Presidents appoint all cabinet heads and most other high-ranking officials of the executive branch of the federal government. They also nominate all judges of the federal judiciary, including the members of the Supreme Court. Their appointments to executive and judicial posts must be approved by a majority of the Senate (one of the two chambers of Congress, the legislative branch of the federal government, the other being the House of Representatives). The Senate usually confirms these appointments, though it occasionally rejects a nominee to whom a majority of members have strong objections. The president is also the commander in chief of the country's military and has unlimited authority to direct the movements of land, sea, and air forces. The president has the power to make treaties with foreign governments, though the Senate must approve such treaties by a two-thirds majority. Finally, the president has the power to approve or reject (veto) bills passed by Congress, though Congress can override the president's veto by summoning a two-thirds majority in each chamber in favour of the measure.

Historical development

By the time the Constitutional Convention assembled in Philadelphia on May 25, 1787, wartime and postwar difficulties had convinced most of the delegates that an energetic national executive was necessary. They approached the problem warily, however, and a third of them favoured a proposal that would have allowed Congress to select multiple single-term executives, each of whom would be subject to recall by state governors. The subject consumed more debate at the convention than any other. The stickiest points were the method of election and the length of the executive's term. At first, delegates supported the idea that the executive should be chosen by Congress; however, congressional selection would make the executive dependent on the legislature unless the president was ineligible for reelection, and ineligibility would necessitate a dangerously long term (six or seven years was the most common suggestion).

The delegates debated the method of election until early September 1787, less than two weeks before the convention ended. Finally, the Committee on Unfinished Parts, chaired by David Brearley of New Jersey, put forward a cumbersome proposal, the electoral college, that overcame all objections. The system allowed state legislatures (or the voting public, if the legislatures so decided) to choose electors equal in number to the states' representatives and senators combined; the electors would vote for two candidates, one of whom had to be a resident of another state. Whoever received a majority of the votes would be elected president, the runner-up vice president. If no one won a majority, the choice would be made by the House of Representatives, each state delegation casting one vote. The president would serve a four-year term and be eligible for continual reelection (the Twenty-second Amendment, adopted in 1951, later limited the president to a maximum of two terms).

Until agreement on the electoral college, delegates were unwilling to entrust the executive with significant authority, and most executive powers, including the conduct of foreign relations, were held by the Senate. The delegates hastily shifted powers to the executive, and the result was ambiguous. Article II, Section 1, of the Constitution of the United States begins with a simple declarative statement: “The executive Power shall be vested in a President of the United States of America.” The phrasing can be read as a blanket grant of power, an interpretation that is buttressed when the language is compared with the qualified language of Article I: “All legislative Powers herein granted shall be vested in a Congress of the United States.”

This loose construction, however, is mitigated in two important ways. First, Article II itemizes, in Sections 2 and 3, certain presidential powers, including those of commander in chief of the armed forces, appointment making, treaty making, receiving ambassadors, and calling Congress into special session. Had the opening section of Article II been intended as an open-ended authorization, such subsequent specifications would have made no sense. Second, a sizable array of powers traditionally associated with the executive, including the power to declare war, issue letters of marque and reprisal, and coin and borrow money, were given to Congress, not the president, and the power to make appointments and treaties was shared between the president and the Senate.

The delegates could leave the subject ambiguous because of their understanding that George Washington (1789–97) would be selected as the first president. They deliberately left blanks in Article II, trusting that Washington would fill in the details in a satisfactory manner. Indeed, it is safe to assert that had Washington not been available, the office might never have been created.

Postrevolutionary period

Scarcely had Washington been inaugurated when an extraconstitutional attribute of the presidency became apparent. Inherently, the presidency is dual in character. The president serves as both head of government (the nation's chief administrator) and head of state (the symbolic embodiment of the nation). Through centuries of constitutional struggle between the crown and Parliament, England had separated the two offices, vesting the prime minister with the function of running the government and leaving the ceremonial responsibilities of leadership to the monarch. The American people idolized Washington, and he played his part artfully, striking a balance between “too free an intercourse and too much familiarity,” which would reduce the dignity of the office, and “an ostentatious show” of aloofness, which would be improper in a republic.

But the problems posed by the dual nature of the office remained unsolved. A few presidents, notably Thomas Jefferson (1801–09) and Franklin D. Roosevelt (1933–45), proved able to perform both roles. More common were the examples of John F. Kennedy (1961–63) and Lyndon B. Johnson (1963–69). Although Kennedy was superb as the symbol of a vigorous nation (Americans were entranced by the image of his presidency as Camelot), he was ineffectual in getting legislation enacted. Johnson, by contrast, pushed through Congress a legislative program of major proportions, including the Civil Rights Act of 1964, but he was such a failure as a king surrogate that he chose not to run for a second term.

Washington's administration was most important for the precedents it set. For example, he retired after two terms, establishing a tradition maintained until 1940. During his first term he made the presidency a full-fledged branch of government instead of a mere office. As commander in chief during the American Revolutionary War (American Revolution), he had been accustomed to surrounding himself with trusted aides and generals and soliciting their opinions. Gathering the department heads (cabinet) together seemed a logical extension of that practice, but the Constitution authorized him only to “require the Opinion, in writing” of the department heads; taking the document literally would have precluded converting them into an advisory council. When the Supreme Court refused Washington's request for an advisory opinion on the matter of a neutrality proclamation in response to the French revolutionary and Napoleonic wars—on the ground that the court could decide only cases and not controversies—he turned at last to assembling his department heads. Cabinet meetings, as they came to be called, remained the principal instrument for conducting executive business until the late 20th century, though some early presidents, such as Andrew Jackson (Jackson, Andrew) (1829–37), made little use of the cabinet.

The Constitution also authorized the president to make treaties “by and with the Advice and Consent of the Senate,” and many thought that this clause would turn the Senate into an executive council. But when Washington appeared on the floor of the Senate to seek advice about pending negotiations with American Indian tribes, the surprised senators proved themselves to be a contentious deliberative assembly, not an advisory board. Washington was furious, and thereafter neither he nor his successors took the “advice” portion of the clause seriously. At about the same time, it was established by an act of Congress that, though the president had to seek the approval of the Senate for his major appointments, he could remove his appointees unilaterally. This power remained a subject of controversy and was central to the impeachment of Andrew Johnson (1865–69) in 1868.

Washington set other important precedents, especially in foreign policy. In his Farewell Address (1796) he cautioned his successors to “steer clear of permanent alliances with any portion of the foreign world” and not to “entangle our peace and prosperity in the toils of European ambition, rivalship, interest, humor, or caprice.” His warnings laid the foundation for America's isolationist foreign policy, which lasted through most of the country's history before World War II, as well as for the Monroe Doctrine.

Perils accompanying the French revolutionary wars occupied Washington's attention, as well as that of his three immediate successors. Americans were bitterly divided over the wars, some favouring Britain and its allies and others France. Political factions had already arisen over the financial policies of Washington's secretary of the treasury, Alexander Hamilton, and from 1793 onward animosities stemming from the French Revolution hardened these factions into a system of political parties, which the framers of the Constitution had not contemplated.

The emergence of the party system also created unanticipated problems with the method for electing the president. In 1796 John Adams (Adams, John) (1797–1801), the candidate of the Federalist Party, won the presidency and Thomas Jefferson (Jefferson, Thomas) (1801–09), the candidate of the Democratic-Republican Party, won the vice presidency; rather than working with Adams, however, Jefferson sought to undermine the administration. In 1800, to forestall the possibility of yet another divided executive, the Federalists and the Democratic-Republicans, the two leading parties of the early republic, each nominated presidential and vice presidential candidates. Because of party-line voting and the fact that electors could not indicate a presidential or vice presidential preference between the two candidates for whom they voted, the Democratic-Republican candidates, Jefferson and Aaron Burr (Burr, Aaron), received an equal number of votes. The election was thrown to the House of Representatives, and a constitutional crisis nearly ensued as the House became deadlocked. Had it remained deadlocked until the end of Adams's term on March 4, 1801, Supreme Court Chief Justice John Marshall (Marshall, John) would have become president in keeping with the existing presidential succession act. On February 17, 1801, Jefferson was finally chosen president by the House, and with the ratification of the Twelfth Amendment, beginning in 1804, electors were required to cast separate ballots for president and vice president.

The presidency in the 19th century

Jefferson (Jefferson, Thomas) shaped the presidency almost as much as did Washington. He altered the style of the office, departing from Washington's austere dignity so far as to receive foreign ministers in run-down slippers and frayed jackets. He shunned display, protocol, and pomp; he gave no public balls or celebrations on his birthday. By completing the transition to republicanism, he humanized the presidency and made it a symbol not of the nation but of the people. He talked persuasively about the virtue of limiting government—his first inaugural address was a masterpiece on the subject—and he made gestures in that direction. He slashed the army and navy, reduced the public debt, and ended what he regarded as the “monarchical” practice of addressing Congress in person. But he also stretched the powers of the presidency in a variety of ways. While maintaining a posture of deference toward Congress, he managed legislation more effectively than any other president of the 19th century. He approved the Louisiana Purchase despite his private conviction that it was unconstitutional. He conducted a lengthy and successful war against the Barbary pirates (Barbary pirate) of North Africa without seeking a formal declaration of war from Congress. He used the army against the interests of the American people in his efforts to enforce an embargo that was intended to compel Britain and France to respect America's rights as a neutral during the Napoleonic wars and ultimately to bring those two countries to the peace table. In 1810 Jefferson wrote in a letter that circumstances “sometimes occur” when “officers of high trust” must “assume authorities beyond the law” in keeping with the “salus populi…, the laws of necessity, of self-preservation, of saving our country when in danger.” On those occasions “a scrupulous adherence to written law, would be to lose the law itself…thus absurdly sacrificing the end to the means.”

From Jefferson's departure until the end of the century, the presidency was perceived as an essentially passive institution. Only three presidents during that long span acted with great energy, and each elicited a vehement congressional reaction. Andrew Jackson (Jackson, Andrew) exercised the veto flamboyantly; attempted, in the so-called Bank War, to undermine the Bank of the United States by removing federal deposits; and sought to mobilize the army against South Carolina when that state adopted an Ordinance of nullification declaring the federal tariffs of 1828 and 1832 to be null and void within its boundaries. By the time his term ended, the Senate had censured him and refused to receive his messages. (When Democrats (Democratic Party) regained control of the Senate from the Whigs (Whig Party), Jackson's censure was expunged.) James K. Polk (Polk, James K.) (1845–49) maneuvered the United States into the Mexican War (Mexican-American War) and only later sought a formal congressional declaration. When he asserted that “a state of war exists” with Mexico, Senator John C. Calhoun (Calhoun, John C) of South Carolina launched a tirade against him, insisting that a state of war could not exist unless Congress declared one. The third strong president during the period, Abraham Lincoln (Lincoln, Abraham) (1861–65), defending the salus populi in Jeffersonian fashion, ran roughshod over the Constitution during the American Civil War. Radical Republican congressmen were, at the time of his assassination, sharpening their knives in opposition to his plans for reconstructing the rebellious Southern states, and they wielded them to devastating effect against his successor, Andrew Johnson (Johnson, Andrew). They reduced the presidency to a cipher, demonstrating that Congress can be more powerful than the president if it acts with complete unity. 
Johnson was impeached on several grounds, including his violation of the Tenure of Office Act, which forbade the president from removing civil officers without the consent of the Senate. Although Johnson was not convicted, he and the presidency were weakened.

Contributing to the weakness of the presidency after 1824 was the use of national conventions rather than congressional caucuses to nominate presidential candidates (see below The convention system). The new system existed primarily as a means of winning national elections and dividing the spoils of victory, and the principal function of the president became the distribution of government jobs.

Changes in the 20th century

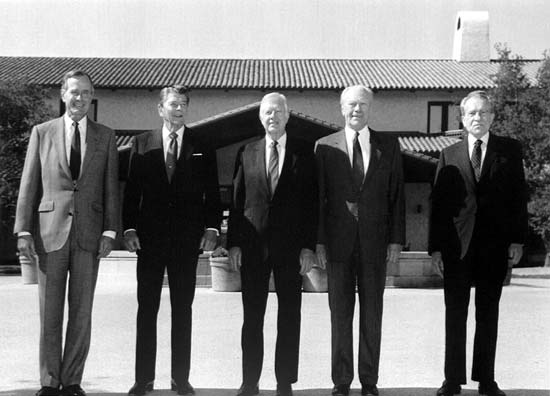

In the 20th century the powers and responsibilities of the presidency were transformed. President Theodore Roosevelt (Roosevelt, Theodore) (1901–09) regarded the presidency as a “bully pulpit” from which to preach morality and rally his fellow citizens against “malefactors of great wealth,” and he wheedled from Congress a generous fund for railroad travel to put his pulpit on wheels. Other presidents followed Roosevelt's example, with varying results. Woodrow Wilson (Wilson, Woodrow) (1913–21) led the United States into World War I to make the world “safe for democracy,” though he failed to win congressional approval for American membership in the League of Nations (Nations, League of). Franklin D. Roosevelt was the first president to use the medium of radio effectively, and he raised the country's morale dramatically during the Great Depression. Ronald Reagan (Reagan, Ronald W.) (1981–89), known as the “Great Communicator,” employed televised addresses and other appearances to restore the nation's self-confidence and commit it to struggling against the Soviet Union, which he referred to as an “evil empire.”

Theodore Roosevelt also introduced the practice of issuing substantive executive orders. Although the Supreme Court ruled that such orders had the force of law only if they were justified by the Constitution or authorized by Congress, in practice they covered a wide range of regulatory activity. By the early 21st century some 50,000 executive orders had been issued. Roosevelt also used executive agreements—direct personal pacts with other chief executives—as an alternative to treaties. The Supreme Court's ruling in U.S. v. Belmont (1937) that such agreements had the constitutional force of a treaty greatly enhanced the president's power in the conduct of foreign relations.

Woodrow Wilson introduced the notion of the president as legislator in chief. Although he thought of himself as a Jeffersonian advocate of limited government, he considered the British parliamentary system to be superior to the American system, and he abandoned Jefferson's precedent by addressing Congress in person, drafting and introducing legislation, and employing pressure to bring about its enactment.

Franklin D. Roosevelt (Roosevelt, Franklin D.) completed the transformation of the presidency. In the midst of the Great Depression, Congress granted him unprecedented powers, and when it declined to give him the powers he wanted, he simply assumed them; after 1937 the Supreme Court acquiesced to the changes. Equally important was the fact that the popular perception of the presidency had changed; people looked to the president for solutions to all their problems, even in areas quite beyond the capacity of government at any level. Everything good that happened was attributed to the president's benign will, everything bad to wicked advisers or opponents. Presidential power remained at unprecedented levels from the 1950s to the mid-1970s, when Richard Nixon (Nixon, Richard M.) (1969–74) was forced to resign the office because of his role in the Watergate Scandal. The Watergate affair greatly increased public cynicism about politics and elected officials, and it inspired legislative attempts to curb executive power in the 1970s and '80s.

Several developments since the end of World War II have tended to make the president's job more difficult. After Roosevelt died and Republicans gained a majority in Congress, the Twenty-second Amendment, which limits presidents to two terms of office, was adopted in 1951. Two decades later, reacting to perceived abuses by Presidents Lyndon Johnson and Richard Nixon, Congress passed the Budget and Impoundment Control Act to reassert its control over the budget; the act imposed constraints on impoundments, created the Congressional Budget Office, and established a timetable for passing budget bills. In 1973, in the midst of the Vietnam War, Congress overrode Nixon's veto of the War Powers Act, which attempted to reassert Congress's constitutional war-making authority by subjecting future military ventures to congressional review. Subsequent presidents, however, contended that the resolution was unconstitutional and generally ignored it. Confrontations over the constitutional limits of presidential authority became more frequent in the 1980s and '90s, when the presidency and Congress were commonly controlled by different parties, which led to stalemate and a virtual paralysis of government.

One challenge facing presidents beginning in the late 20th century was the lack of reliable sources of information. Franklin D. Roosevelt could depend on local party bosses for accurate grassroots data, but the presidents of later generations had no such resource. Every person or group seeking the president's attention had special interests to plead, and misinformation and disinformation were rife. Moreover, the burgeoning of the executive bureaucracy created filters that limited or distorted the information flowing to the president and his staff. Public opinion polls, on which presidents increasingly depended, were often biased and misleading. Another problem, which resulted from the proliferation of presidential primaries after 1968 and the extensive use of political advertising on television, was the high cost of presidential campaigns and the consequent increase in the influence of special interest groups (see below The money game).

At the start of the 21st century, presidential power, while nominally still enormous, was institutionally bogged down by congressional reforms and the changing relationship between the presidency and other institutional and noninstitutional actors. Moreover, the end of the Cold War shattered the long-standing bipartisan consensus on foreign policy and revived tensions between the executive and legislative branches over the extent of executive war-making power. The presidency also had become vulnerable again as a result of scandals and impeachment during the second term of Bill Clinton (Clinton, Bill) (1993–2001), and it seemed likely to be weakened even further by the bitter controversy surrounding the 2000 presidential election, in which Republican George W. Bush (Bush, George W.) (2001– ) lost the popular vote but narrowly defeated the Democratic candidate, Vice President Al Gore (Gore, Al), in the electoral college after the U.S. Supreme Court ordered a halt to the manual recounting of disputed ballots in Florida. It is conceivable, however, that this trend was welcomed by the public. For as opinion polls consistently showed, though Americans liked strong, activist presidents, they also distrusted and feared them.

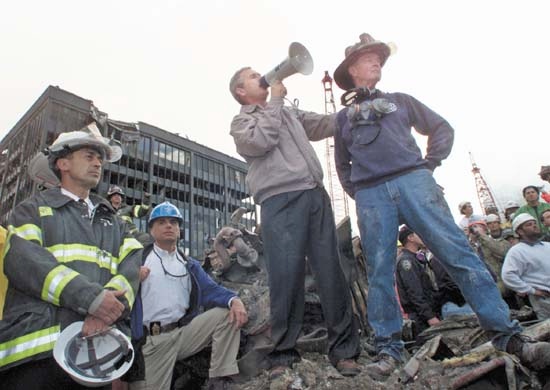

That division of sentiment was exacerbated by events during the administration of George W. Bush. The September 11 attacks of 2001, which stunned and horrified Americans, prompted Bush to launch what he labeled a “global war on terror.” A majority of Americans supported the subsequent U.S. attack on Afghanistan, whose Taliban regime had been accused of harbouring al-Qaeda, the terrorist organization responsible for the September 11 attacks. In 2002 the administration shifted its attention to Iraq, charging the government of Ṣaddām Ḥussein with possessing and actively developing weapons of mass destruction (WMD) and with having ties to terrorist groups, including al-Qaeda. The U.S.-led invasion of Iraq in 2003 quickly toppled Ṣaddām but failed to uncover any WMD, prompting critics to charge that the administration had misled the country into war. Meanwhile, many Americans watched with anxiety as an insurgency intensified against U.S. troops and the Iraqi regime. The subsequent presidential election campaign of 2004, the first in more than 30 years to be conducted during wartime, was marked by an intense acrimony between Bush supporters and opponents that continued after Bush's reelection. As Bush declared the spread of democracy (particularly in the Middle East) to be an important goal of his second term, the institution of the presidency seemed once again to be tied to the Wilsonian premise that the role of the United States was to make the world safe for democracy.

Selecting a president

Although the framers of the Constitution established a system for electing the president—the electoral college—they did not devise a method for nominating presidential candidates or even for choosing electors. They assumed that the selection process as a whole would be nonpartisan and devoid of factions (or political parties), which they believed were always a corrupting influence in politics. The original process worked well in the early years of the republic, when Washington, who was not affiliated closely with any faction, was the unanimous choice of electors in both 1789 and 1792. However, the rapid development of political parties soon presented a major challenge, one that led to changes that would make presidential elections more partisan but ultimately more democratic.

The practical and constitutional inadequacies of the original electoral college system became evident in the election of 1800, when the two Democratic-Republican candidates, Jefferson and Burr, received an equal number of electoral votes and thereby left the presidential election to be decided by the House of Representatives. The Twelfth Amendment (1804), which required electors to vote for president and vice president separately, remedied this constitutional defect.

Because each state was free to devise its own system of choosing electors, disparate methods initially emerged. In some states electors were appointed by the legislature, in others they were popularly elected, and in still others a mixed approach was used. In the first presidential election, in 1789, four states (Delaware, Maryland, Pennsylvania, and Virginia) used systems based on popular election. Popular election gradually replaced legislative appointment, the most common method through the 1790s, until by the 1830s all states except South Carolina chose electors by direct popular vote. See also Sidebar: Keys to the White House.

The evolution of the nomination process

“King Caucus”

While popular voting was transforming the electoral college system, there were also dramatic shifts in the method for nominating presidential candidates. There being no consensus on a successor to Washington upon his retirement after two terms as president, the newly formed political parties quickly asserted control over the process. Beginning in 1796, caucuses (caucus) of the parties' congressional delegations met informally to nominate their presidential and vice presidential candidates, leaving the general public with no direct input. The subsequent demise in the 1810s of the Federalist Party, which failed even to nominate a presidential candidate in 1820, made nomination by the Democratic-Republican caucus tantamount to election as president. This early nomination system—dubbed “King Caucus” by its critics—evoked widespread resentment, even from some members of the Democratic-Republican caucus. By 1824 it had fallen into such disrepute that only one-fourth of the Democratic-Republican congressional delegation took part in the caucus that nominated Secretary of the Treasury William Crawford (Crawford, William H) instead of more popular figures such as John Quincy Adams (Adams, John Quincy) and Andrew Jackson (Jackson, Andrew). Jackson, Adams, and Henry Clay (Clay, Henry) eventually joined Crawford in contesting the subsequent presidential election, in which Jackson received the most popular and electoral votes but was denied the presidency by the House of Representatives (which selected Adams) after he failed to win the required majority in the electoral college. Jackson, who was particularly enraged following Adams's appointment of Clay as secretary of state, called unsuccessfully for the abolition of the electoral college, but he would get his revenge by defeating Adams in the presidential election of 1828.

The convention system

In a saloon in Baltimore, Maryland, in 1832, Jackson's Democratic Party held one of the country's first national conventions (the first such convention had been held the previous year, in the same saloon, by the Anti-Masonic Party). The Democrats nominated Jackson as their presidential candidate and Martin Van Buren as his running mate and drafted a party platform. It was assumed that open and public conventions would be more democratic, but they soon came under the control of small groups of state and local party leaders, who handpicked many of the delegates. The conventions were often tense affairs, and sometimes multiple ballots were needed to overcome party divisions, particularly at conventions of the Democratic Party, which required its presidential and vice presidential nominees to secure the support of two-thirds of the delegates (a rule that was abolished in 1936).

The convention system was unaltered until the beginning of the 20th century, when general disaffection with elitism led to the growth of the Progressive movement and the introduction in some states of binding presidential primary elections, which gave rank-and-file party members more control over the delegate-selection process. By 1916 some 20 states were using primaries, though in subsequent decades several states abolished them. From 1932 to 1968 the number of states holding presidential primaries was fairly constant (between 12 and 19), and presidential nominations remained the province of convention delegates and party bosses rather than of voters. Indeed, in 1952 Democratic convention delegates selected Adlai Stevenson as the party's nominee though Estes Kefauver had won more than three-fifths of the votes in that year's presidential primaries. In 1968, at a raucous convention in Chicago that was marred by violence on the city's streets and chaos in the convention hall, Vice President Hubert Humphrey captured the Democratic Party's presidential nomination despite his not having contested a single primary.

Post-1968 reforms

To unify the Democratic Party, Humphrey appointed a committee that proposed reforms that later fundamentally altered the nomination process for both major national parties. The reforms introduced a largely primary-based system that reduced the importance of the national party conventions. Although the presidential and vice presidential candidates of both the Democratic Party and the Republican Party are still formally selected by national conventions, most of the delegates are selected through primaries—or, in a minority of states, through caucuses—and the delegates gather merely to ratify the choice of the voters.

The modern nomination process

Deciding to run

Although there are few constitutional requirements for the office of the presidency (presidents must be natural-born citizens, at least 35 years of age, and residents of the United States for at least 14 years), there are considerable informal barriers. No woman or ethnic minority has yet been elected president, and all presidents but one have been Protestants (John F. Kennedy was the only Roman Catholic to occupy the office). Successful presidential candidates generally have followed one of two paths to the White House: from prior elected office (some four-fifths of presidents have been members of the U.S. Congress or state governors) or from distinguished service in the military (e.g., Washington, Jackson, and Dwight D. Eisenhower [1953–61]).

The decision to become a candidate for president is often a difficult one, in part because candidates and their families must endure intensive scrutiny of their entire public and private lives by the news media. Before officially entering the race, prospective candidates usually organize an exploratory committee to assess their political viability. They also travel the country extensively to raise money and to generate grassroots support and favourable media exposure. Those who ultimately opt to run have been described by scholars as risk takers who have a great deal of confidence in their ability to inspire the public and to handle the rigours of the office they seek.

The money game

Political campaigns in the United States are expensive—and none more so than those for the presidency. Presidential candidates generally need to raise tens of millions of dollars to compete for their party's nomination. Even candidates facing no internal party opposition, such as incumbent presidents Bill Clinton in 1996 and George W. Bush in 2004, raise enormous sums to dissuade prospective candidates from entering the race and to campaign against their likely opponent in the general election before either party has officially nominated a candidate. Long before the first vote is cast, candidates spend much of their time fund-raising, a fact that has prompted many political analysts to claim that in reality the so-called “money primary” is the first contest in the presidential nomination process. Indeed, much of the early media coverage of a presidential campaign focuses on fund-raising, particularly at the end of each quarter, when the candidates are required to file financial reports with the Federal Election Commission (FEC). Candidates who are unable to raise sufficient funds often drop out before the balloting has begun.

In the 1970s legislation regulating campaign contributions and expenditures was enacted to address increasing concerns that the largely private funding of presidential elections enabled large contributors to gain unfair influence over a president's policies and legislative agenda. Presidential candidates who agree to limit their expenditures in the primaries and caucuses to a fixed overall amount are eligible for federal matching funds, which are collected through a taxpayer “check-off” system that allows individuals to contribute a portion of their federal income tax to the Presidential Election Campaign Fund. To become eligible for such funds, candidates are required to raise a minimum of $5,000 in at least 20 states (only the first $250 of each contribution counts toward the $5,000); they then receive from the FEC a sum equivalent to the first $250 of each individual contribution (or a fraction thereof if there is a shortfall in the fund). Candidates opting to forgo federal matching funds for the primaries and caucuses, such as George W. Bush in 2000 and 2004, John Kerry in 2004, and self-financed candidate Steve Forbes in 1996, are not subject to spending limits. From 1976 through 2000, candidates could collect from individuals a maximum contribution of $1,000, a sum subsequently raised to $2,000 and indexed for inflation by the Bipartisan Campaign Reform Act of 2002 (the figure was $2,300 for the 2008 presidential election).
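The eligibility test and the matching calculation described above can be sketched in a few lines of Python. This is only an illustration of the arithmetic as stated in the text, not the FEC's actual procedure: the function names, the representation of contributions as (state, amount) pairs, and the `payout_fraction` parameter (standing in for a shortfall in the fund) are all assumptions made for the example.

```python
from collections import defaultdict

MATCH_CAP = 250          # only the first $250 of each contribution counts
STATE_THRESHOLD = 5_000  # minimum matchable total that must be raised in a state
STATES_REQUIRED = 20     # number of states that must reach that minimum


def matchable_by_state(contributions):
    """Sum the matchable part (at most $250) of each contribution, per state.

    `contributions` is a list of (state, amount) pairs; this layout is a
    hypothetical choice for the sketch.
    """
    totals = defaultdict(int)
    for state, amount in contributions:
        totals[state] += min(amount, MATCH_CAP)
    return dict(totals)


def is_eligible(contributions):
    """True if at least 20 states each have $5,000 or more in matchable money."""
    totals = matchable_by_state(contributions)
    qualifying = sum(1 for total in totals.values() if total >= STATE_THRESHOLD)
    return qualifying >= STATES_REQUIRED


def matching_funds(contributions, payout_fraction=1.0):
    """Federal match: the first $250 of each contribution, scaled down by
    `payout_fraction` (an assumed parameter) when the fund has a shortfall."""
    if not is_eligible(contributions):
        return 0.0
    matchable = sum(min(amount, MATCH_CAP) for _, amount in contributions)
    return matchable * payout_fraction
```

Under these assumptions, a candidate who receives a single $5,000 contribution in each of 20 states does not qualify, because only $250 of each contribution counts toward the state minimum; 20 contributions of $250 or more in each of 20 states would qualify.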

Despite these reforms, money continues to exert a considerable influence in the nomination process and in presidential elections. Although prolific fund-raising by itself is not sufficient for winning the Democratic or Republican nominations or for being elected president, it is certainly necessary.

The primary and caucus season

Most delegates to the national conventions of the Democratic and Republican parties are selected through primaries or caucuses and are pledged to support a particular candidate. Each state party determines the date of its primary or caucus. Historically, Iowa held its caucus in mid-February, followed a week later by a primary in New Hampshire; the campaign season then ran through early June, when primaries were held in states such as New Jersey and California. Winning in either Iowa or New Hampshire, or at least doing better than expected there, often boosted a campaign, while faring poorly sometimes led candidates to withdraw. Accordingly, candidates often spent years organizing grassroots support in these states. In 1976 such a strategy in Iowa propelled Jimmy Carter (1977–81), then a relatively unknown governor from Georgia, to the Democratic nomination and the presidency.

Because of criticism that Iowa and New Hampshire were unrepresentative of the country and exerted too much influence in the nomination process, several other states began to schedule their primaries earlier. In 1988, for example, 16 largely Southern states moved their primaries to a day in early March that became known as “Super Tuesday.” Such “front-loading” of primaries and caucuses continued during the 1990s, prompting Iowa and New Hampshire to schedule their contests even earlier, in January, and causing the Democratic Party to adopt rules to protect the privileged status of the two states. By 2008 some 40 states had scheduled their primaries or caucuses for January or February; few primaries or caucuses are now held in May or June. For the 2008 campaign, several states attempted to blunt the influence of Iowa and New Hampshire by moving their primaries and caucuses to January, forcing Iowa to hold its caucus on January 3 and New Hampshire its primary on January 8. Some states, however, scheduled primaries earlier than the calendar sanctioned by the Democratic and Republican National Committees, and, as a result, both parties either reduced or, in the case of the Democrats, stripped states violating party rules of their delegates to the national convention. For example, Michigan and Florida held their primaries on Jan. 15 and Jan. 29, 2008, respectively; both states were stripped of half their Republican and all their Democratic delegates to the national convention. Front-loading has severely truncated the campaign season, requiring candidates to raise more money sooner and making it more difficult for lesser-known candidates to gain momentum by doing well in early primaries and caucuses.

Presidential nominating conventions

One important consequence of the front-loading of primaries is that the nominees of both major parties are now usually determined by March or April. To secure a party's nomination, a candidate must win the votes of a majority of the delegates attending the convention. (More than 4,000 delegates attend the Democratic convention, while the Republican convention usually comprises some 2,500 delegates.) In most Republican primaries the candidate who wins the statewide popular vote is awarded all the state's delegates. By contrast, the Democratic Party requires that delegates be allocated proportionally to each candidate who wins at least 15 percent of the popular vote. It thus takes Democratic candidates longer than Republican candidates to amass the required majority. In 1984 the Democratic Party created a category of “superdelegates,” who are unpledged to any candidate. Consisting of federal officeholders, governors, and other high-ranking party officials, they usually constitute 15 to 20 percent of the total number of delegates. Other Democratic delegates are required on the first ballot to vote for the candidate whom they are pledged to support, unless that candidate has withdrawn from consideration. If no candidate receives a first-ballot majority, the convention becomes open to bargaining, and all delegates are free to support any candidate. The last convention to require a second ballot was held in 1952, before the advent of the primary system.
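The difference between the two allocation rules can be made concrete with a small Python sketch. It is illustrative only: the text does not say how fractional delegates are rounded, so the largest-remainder method used here is an assumption, as are the function names and the hypothetical vote totals in the usage note.

```python
def winner_take_all(votes, delegates):
    """Award every delegate to the candidate with the most popular votes."""
    winner = max(votes, key=votes.get)
    return {name: (delegates if name == winner else 0) for name in votes}


def proportional(votes, delegates, threshold_percent=15):
    """Split delegates among candidates who reach the vote threshold.

    Rounding uses the largest-remainder method, which is an assumption of
    this sketch rather than a rule stated in the text.
    """
    total = sum(votes.values())
    # Integer comparison avoids floating-point error at exactly 15 percent.
    viable = {n: v for n, v in votes.items() if v * 100 >= threshold_percent * total}
    if not viable:
        raise ValueError("no candidate reached the threshold")
    viable_total = sum(viable.values())

    seats, remainders = {}, {}
    for name, count in viable.items():
        seats[name], remainders[name] = divmod(count * delegates, viable_total)

    # Hand out the delegates lost to rounding, largest remainder first.
    leftover = delegates - sum(seats.values())
    for name in sorted(viable, key=lambda n: remainders[n], reverse=True)[:leftover]:
        seats[name] += 1

    return {name: seats.get(name, 0) for name in votes}
```

With hypothetical totals of 500, 400, and 100 votes for candidates A, B, and C and 10 delegates at stake, `winner_take_all` gives A all 10, whereas `proportional` gives A 6 and B 4 and leaves C, who fell below 15 percent, with none. The leader's smaller haul under the proportional rule is why a majority takes longer to assemble.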

The Democratic and Republican nominating conventions are held during the summer prior to the November general election and are publicly funded through the taxpayer check-off system. (The party that holds the presidency usually holds its convention second.) Shortly before the convention, the presidential candidate selects a vice presidential running mate, often to balance the ticket ideologically or geographically or to shore up one or more of the candidate's perceived weaknesses.

In the early days of television, the conventions were media spectacles and were covered by the major commercial networks gavel to gavel. As the importance of the conventions declined, however, so too did the media coverage of them. Nevertheless, the conventions are still considered vital. It is at the conventions that the parties draft their platforms, which set out the policies of each party and its presidential candidate. The convention also serves to unify each party after what may have been a bitter primary season. Finally, the conventions mark the formal start of the general election campaign (because the nominees do not receive federal money until they have been formally chosen by the convention delegates), and they provide the candidates with a large national audience and an opportunity to explain their agendas to the American public.

The general election campaign

Although the traditional starting date of the general election campaign is Labor Day (the first Monday in September), in practice the campaign begins much earlier, because the nominees are known long before the national conventions. Like primary campaigns and the national conventions, the general election campaign is publicly funded through the taxpayer check-off system. Since public financing was introduced in the 1970s, all Democratic and Republican candidates have opted to receive federal matching funds for the general election; in exchange for such funds, they agree to limit their spending to an amount equal to the federal matching funds they receive plus a maximum personal contribution of $50,000. By 2004 each major party nominee received some $75 million. In 2008 Democratic nominee Barack Obama became the first candidate to opt out of public financing for both the primary and the general election campaign; he raised more than $650 million.

Minor party presidential candidates face formidable barriers. Whereas Democratic and Republican presidential candidates automatically are listed first and second on general election ballots, minor party candidates must navigate the complex and varied state laws to gain ballot access. In addition, a new party is eligible for federal financing in an election only if it received at least 5 percent of the vote in the previous election. All parties that receive at least 25 percent of the vote in the prior presidential election are entitled to equivalent public funding.

A candidate's general election strategy is largely dictated by the electoral college system. All states except Maine and Nebraska follow the unit rule, by which all of a state's electoral votes are awarded to the candidate who receives the most popular votes in that state. Candidates therefore focus their resources and time on large states and states that are considered toss-ups, and they tend to ignore states that are considered safe for one party or the other and states with few electoral votes.
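A brief Python sketch shows how the unit rule turns state-by-state pluralities into an electoral total. The three states, their electoral votes, and the vote counts are invented for illustration, and the sketch ignores the different arrangements in Maine and Nebraska.

```python
def electoral_tally(states):
    """Apply the unit rule to each state and total the results.

    `states` is a list of (electoral_votes, {candidate: popular_votes})
    pairs; the layout is a hypothetical choice for this sketch.
    Returns (electoral totals, nationwide popular totals).
    """
    electoral, popular = {}, {}
    for electoral_votes, results in states:
        for candidate, count in results.items():
            popular[candidate] = popular.get(candidate, 0) + count
            electoral.setdefault(candidate, 0)
        # Unit rule: the state's plurality winner takes all its electoral votes.
        winner = max(results, key=results.get)
        electoral[winner] += electoral_votes
    return electoral, popular


# Hypothetical data: X wins two states narrowly, Y wins one by a landslide.
example_states = [
    (10, {"X": 510, "Y": 490}),
    (10, {"X": 520, "Y": 480}),
    (9, {"X": 200, "Y": 800}),
]
electoral, popular = electoral_tally(example_states)
print(electoral)  # {'X': 20, 'Y': 9}
print(popular)    # {'X': 1230, 'Y': 1770}
```

In this invented example the large margin in the third state adds nothing to Y's electoral total, which suggests why campaigns concentrate on closely contested states rather than on running up margins in safe ones.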

Modern presidential campaigns are media driven, as candidates spend millions of dollars on television advertising and on staged public events (photo ops) designed to generate favourable media coverage. The most widely viewed campaign spectacles are the debates between the Democratic and Republican presidential and vice presidential candidates (minor parties are often excluded from such debates, a fact cited by critics who contend that the current electoral process is undemocratic and inimical to viewpoints other than those of the two major parties). First televised in 1960, such debates have been a staple of the presidential campaign since 1976. They are closely analyzed in the media and sometimes result in a shift of public opinion in favour of the candidate who is perceived to be the winner or who is seen as more attractive or personable by most viewers. (Some analysts have argued, for example, that John F. Kennedy's relaxed and self-confident manner, as well as his good looks, aided him in his debate with Richard Nixon and contributed to his narrow victory in the presidential election of 1960.) Because of the potential impact and the enormous audience of the debates (some 80 million people watched the single debate between Jimmy Carter and Ronald Reagan in 1980), the campaigns usually undertake intensive negotiations over the number of debates as well as their rules and format.

The presidential election is held on the Tuesday following the first Monday in November. Voters do not actually vote for presidential and vice presidential candidates but rather vote for electors pledged to a particular candidate. Only on rare occasions, such as the disputed presidential election in 2000 between Al Gore and George W. Bush, is it not clear on election day (or the following morning) who won the presidency. Although it is possible for the candidate who has received the most popular votes to lose the electoral vote (as also occurred in 2000), such inversions are infrequent. The electors gather in their respective state capitals to cast their votes on the Monday following the second Wednesday in December, and the results are formally ratified by Congress in early January.
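The two date rules given above are easy to misread (the election does not simply fall on the first Tuesday of November), so a short Python sketch may help. It uses only the standard `datetime` module; the helper names are hypothetical, and the rules are encoded exactly as the text states them.

```python
import datetime

MONDAY, WEDNESDAY = 0, 2  # datetime.date.weekday() numbering


def nth_weekday(year, month, weekday, n):
    """Return the date of the n-th given weekday in a month (n starts at 1)."""
    first = datetime.date(year, month, 1)
    offset = (weekday - first.weekday()) % 7
    return first + datetime.timedelta(days=offset + 7 * (n - 1))


def election_day(year):
    """The Tuesday following the first Monday in November."""
    return nth_weekday(year, 11, MONDAY, 1) + datetime.timedelta(days=1)


def electors_meet(year):
    """The Monday following the second Wednesday in December."""
    return nth_weekday(year, 12, WEDNESDAY, 2) + datetime.timedelta(days=5)


print(election_day(2008))   # 2008-11-04
print(electors_meet(2008))  # 2008-12-15
```

Because the rule is anchored to the first Monday, election day can fall no earlier than November 2 and no later than November 8.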

Upon winning the election, a nonincumbent president-elect appoints a transition team to effect a smooth transfer of power between the incoming and outgoing administrations. The formal swearing-in ceremony and inauguration of the new president occurs on January 20 in Washington, D.C. The chief justice of the United States administers the formal oath of office to the president-elect: “I do solemnly swear (or affirm) that I will faithfully execute the office of President of the United States, and will to the best of my ability, preserve, protect and defend the Constitution of the United States.” The new president's first speech, called the Inaugural Address, is then delivered to the nation.

Presidents of the United States

The table provides a list of U.S. presidents.

United States presidential election results

The table provides a list of U.S. electoral college results.

Additional Reading

General studies, organized historically, include Forrest McDonald, The American Presidency: An Intellectual History (1994); Sidney M. Milkis and Michael Nelson, The American Presidency: Origins and Developments, 1776–2002, 4th ed. (2003); and a classic earlier work, Edward S. Corwin, The President: Office and Powers, 1787–1957, 4th rev. ed. (1957).

Analyses of the office by its functions are Thomas E. Cronin, The State of the Presidency, 2nd ed. (1980); Louis W. Koenig, The Chief Executive, 6th ed. (1996); and Richard M. Pious, The American Presidency (1979).

A wide-ranging study by a variety of specialists is Thomas E. Cronin (ed.), Inventing the American Presidency (1989). An important work whose approach is indicated by its title is Arthur M. Schlesinger, Jr., The Imperial Presidency (1973, reissued 1998). Crucial to understanding the inner dynamics of the office is Richard E. Neustadt, Presidential Power and the Modern Presidents, rev. ed. (1990).

The presidential selection process and campaigns are examined in Stephen J. Wayne, The Road to the White House, 2004: The Politics of Presidential Elections (2004); Nelson W. Polsby and Aaron B. Wildavsky, Presidential Elections: Strategies and Structures of American Politics, 11th ed. (2004); Paul F. Boller, Jr., Presidential Campaigns: From George Washington to George W. Bush, 2nd rev. ed. (2004); and Kathleen Hall Jamieson, Packaging the Presidency: A History and Criticism of Presidential Campaign Advertising, 3rd ed. (1996). Presidential debates are comprehensively examined in Alan Schroeder, Presidential Debates: Forty Years of High-Risk TV (2000).

For individual presidencies, the best works are those in the American Presidency Series published by the University Press of Kansas. The leading scholarly journal in the field is Presidential Studies Quarterly.

Ed.
